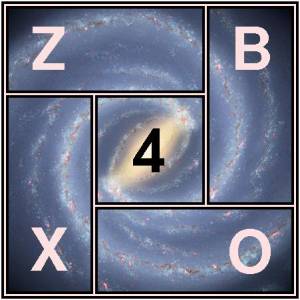

The zBox4 Supercomputer

The fourth generation of the self-made supercomputer at the ITP is the first real design update to the original zBox1, which was built in 2002. The number of cores and the amount of memory were increased substantially over zBox3, and the aging SCI network was replaced by QDR Infiniband. From the 576 cores (Intel Core2) and 1.3 TB of RAM of zBox3, we now have 3072 cores (Intel Sandy Bridge E5) and 12 TB of RAM. While zBox3 was an upgrade of the main boards, CPUs and memory without any design changes, zBox4 involved completely redesigning the platters, which now each hold 4 nodes. The special rack that houses these platters was also improved: a special nozzle was added to improve airflow, and the cable routing was cleaned up.

Planning for zBox4 began in the spring of 2011, but these plans were partially scrapped in the late fall of 2011 to await the arrival of the Intel Sandy Bridge E5 chip, with 8 cores and 4 memory channels per chip, and of main boards to support it. Serious planning began again in the spring of 2012, and construction began at the end of September 2012. The dismantling of zBox3 and the construction of zBox4 were performed by volunteer students, postdocs and friends of the Institute, with even a few professors lending a helping hand.

Specifications

Hardware

- CPUs: 384 Intel Xeon E5-2660 (8 cores @ 2.2 GHz, 95 W), 3072 cores in total

- Main Boards: 192 Supermicro X9DRT-IBQF (2 CPUs per node) with on-board QDR Infiniband

- RAM: Hynix DDR3-1600, 4 GB/core, 64 GB/node, 12.3 TB in total

- SSD: 192 OCZ Vertex 4 high-performance 128 GB drives, 24.6 TB in total

- HPC Network: QLogic/Intel QDR Infiniband in 2:1 fat tree (9 leaf and 3 core switches)

- Gbit Ethernet and dedicated 100 Mbit management networks

- Power usage (full load): 44 kW

- Dimensions (L x W x H): 1.5 m x 1.5 m x 1.7 m

- Number of Cables: 112 power, 300 Infiniband, 388 Ethernet

- Cost: under 750'000 CHF
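The totals in the list above follow from the per-node figures, and the short Python sketch below reproduces that arithmetic. The Infiniband part rests on an assumption that is not stated on this page: 36-port QDR switches, with each leaf switch split into 24 node ports and 12 uplinks to give the 2:1 ratio. Under that assumption the cable count comes out at the 300 listed above. The function and constant names are made up for this illustration.

```python
# Figures copied from the hardware list above.
NODES = 192
CPUS_PER_NODE = 2
CORES_PER_CPU = 8
RAM_PER_CORE_GB = 4
SSD_PER_NODE_GB = 128
LEAF_SWITCHES = 9
CORE_SWITCHES = 3

# ASSUMPTION (not stated on this page): 36-port switches.
PORTS_PER_SWITCH = 36


def hardware_totals():
    """Derive the machine-wide totals from the per-node figures."""
    cpus = NODES * CPUS_PER_NODE
    cores = cpus * CORES_PER_CPU
    ram_per_node_gb = CPUS_PER_NODE * CORES_PER_CPU * RAM_PER_CORE_GB
    return {
        "cpus": cpus,
        "cores": cores,
        "ram_per_node_gb": ram_per_node_gb,
        # Decimal units (1 TB = 1000 GB), matching the rounded list values.
        "ram_total_tb": NODES * ram_per_node_gb / 1000,
        "ssd_total_tb": NODES * SSD_PER_NODE_GB / 1000,
    }


def fat_tree_cables():
    """Count Infiniband cables for a 2:1 fat tree under the port assumption."""
    # 2:1 means twice as many node-facing ports as uplinks on each leaf.
    uplinks_per_leaf = PORTS_PER_SWITCH // 3
    downlinks_per_leaf = PORTS_PER_SWITCH - uplinks_per_leaf
    uplinks = LEAF_SWITCHES * uplinks_per_leaf
    return {
        "node_ports_available": LEAF_SWITCHES * downlinks_per_leaf,
        "uplinks": uplinks,
        "core_ports_available": CORE_SWITCHES * PORTS_PER_SWITCH,
        # One cable per node plus one per leaf-to-core uplink.
        "ib_cables": NODES + uplinks,
    }


if __name__ == "__main__":
    print(hardware_totals())
    print(fat_tree_cables())
```

With these inputs the sketch gives 384 CPUs, 3072 cores, 64 GB per node, 12.288 TB of RAM and 24.576 TB of SSD, which round to the values in the list. The 108 uplinks exactly fill the ports of three 36-port core switches, which makes the port assumption plausible but does not confirm it.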

System Configuration

- OS: Scientific Linux version 6.3

- Queue System: Slurm

- Swap: 8 GB on node-local SSD drive

- Temp Files: 110 GB on node-local SSD drive

- Booting: from node-local SSD, or over Ethernet

Storage System (existing)

- Capacity: 684 TB formatted (RAID-6)

- Lustre file system with 50 OSTs

- 342 x 1.5 TB HDD and 171 x 2.0 TB HDD

- Physical dimensions: 48 standard rack units

- 10 Gb Ethernet and 40 Gb (QDR) Infiniband

- 3 Controllers using Intel E5645 2.4 GHz CPUs
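As a rough cross-check of the storage figures above, the following Python sketch compares the raw disk capacity with the formatted capacity. The 8 data + 2 parity RAID-6 group layout is an assumption chosen because it matches the resulting ratio; the page does not state the group size, and hot spares and file system overhead are ignored. All names are illustrative.

```python
# (drive count, TB per drive), copied from the storage list above.
DRIVES = [(342, 1.5), (171, 2.0)]
FORMATTED_TB = 684


def raw_capacity_tb(drives):
    """Sum the nominal capacity of all drives, in TB."""
    return sum(count * size_tb for count, size_tb in drives)


def raid6_usable_fraction(data_drives, parity_drives=2):
    """Usable fraction of a RAID-6 group (two parity drives per group)."""
    return data_drives / (data_drives + parity_drives)


if __name__ == "__main__":
    raw = raw_capacity_tb(DRIVES)
    print(f"raw capacity:       {raw:.0f} TB")
    print(f"formatted / raw:    {FORMATTED_TB / raw:.2f}")
    # ASSUMPTION: 8 data drives + 2 parity drives per RAID-6 group.
    print(f"8+2 RAID-6 usable:  {raid6_usable_fraction(8):.2f}")
```

The raw capacity is 855 TB, so the 684 TB formatted figure corresponds to 80% of raw, the same fraction an 8+2 RAID-6 group would leave usable.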

Tape Robot

- Capacity: 800 TB, 437 tape slots

- 4 x LTO-5 drives and 2 x LTO-3 drives

- 54 TB high speed tape cache (108 TB raw storage)

- 40 Gb (QDR) Infiniband connected

Design

Construction

"First Light"

October 26, 2012: running on 189 of 192 nodes (3 were sent back for RMA)

Thanks to

Doug Potter : co-designer, without whom this would not have been possible!

Jonathan Coles : for helping oversee the construction, cabling and getting under the floor, and making the most amazing movie!

Elena Biesma (Supermicro) : for her continuous help and for organizing the special production of the motherboards for the project!

All who helped build it:

Donnino Anderhalden, Ray Angelil, Rebekka Bieri, Andreas Bleuler, Michael Busha, Fabio Cascioli, Jonathan Coles, Sebastian Elser, Davide Fiacconi, Andreu Font, Lea Giordano, Simon Grimm, Volker Hoffmann, Felix Huber, Cédric Huwyler, Mohammed Irshad, Matthieu Jaquier, Rafael Kueng, Davide Martizzi, Ben Moore, Christine Corbett Moran, Doug Potter, Darren Reed, Rok Roskar, Prasenjit Saha, Lorenzo Tancredi, Romain Teyssier, Erich Weichs, José Francisco Zurita

All done in 24 hours, great work!

Reto Meyer (UZH Physics Workshop) : metal work for prototypes and making the final drawings of the platters.

To all the Suppliers:

- Levantis AG (Marco Peyer) and Supermicro (Elena Biesma): Motherboards, memory, power

- Dalco (Christian and Franklin Dallman): CPUs

- OHC (Roland Csillag): Infiniband, Solid State Drives, miscellaneous

- Lerch AG (Rolf Weber): Laser cutting and construction of metal parts

- Sibalco : Custom parts for mounting components

Funding, etc.

SNF (Swiss National Science Foundation) for funding (R'Equip grant).

Faculty of Science at the University of Zurich, MNF (Prof. Daniel Wyler) for funding.

Markus Kurmann at the University of Zurich for handling the public tender for the CPUs.